6.824 Study Notes

Patterns and Hints for Concurrency in Go

Lecture 10: Guest Lecture on Go: Russ Cox - YouTube

https://www.youtube.com/watch?v=IdCbMO0Ey9I

Transcript:

okay uh good afternoon good morning good evening good night wherever you are uh let’s get started again uh so uh today we have a guest lecture and probably a speaker that needs little introduction uh there’s uh russ cox uh who’s one of the co-leads on the go project and you know we’ll talk a lot more about it uh let me say a couple words uh and not try to embarrass russ too much russ has a long experience with distributed systems uh he was a developer and contributor to plan 9 uh when he was a high school

student and as an undergrad at harvard he joined the phd program at mit uh which is where we met up and probably if you’re taking any sort of you know pdos class if you will you will see russ’s touches on it and certainly in 6.824 you know the switch to go for us has been a wonderful thing and uh but if you differ in opinion of course feel free to ask russ questions and make suggestions um he’s always welcome to uh entertain any ideas so with that russ it’s yours great

thanks can you still see the slides is that working okay great so um so we built go to support writing the sort of distributed systems that we were building at google and that made go a great fit for you know what came next which is now called cloud software and also a great fit for 6.824 um so in this lecture i’m going to try to explain how i think about writing concurrent programs in go and i’m going to walk through the sort of design and implementation of programs for four different patterns that i see come up often

and along the way i’m going to try to highlight some hints or rules of thumb that you can keep in mind when designing your own go programs and i know the syllabus links to an older version of these slides so you might have seen them already i hope that the lecture form is a bit more intelligible than just sort of looking at the slides um and i hope that in general these patterns are like common enough that you know maybe they’ll be helpful by themselves but also that you know the hints will help you prepare

for whatever it is you need to implement so to start it’s important to distinguish between concurrency and parallelism and concurrency is about how you write your programs about being able to compose independently executing control flows whether you want to call them processes or threads or goroutines so that your program can be dealing with lots of things at once without turning into a giant mess on the other hand parallelism is about how the programs get executed about allowing multiple computations to run

simultaneously so that the program can be doing lots of things at once not just dealing with lots of things at once and so concurrency lends itself naturally to parallel execution but but today the focus is on how to use go’s concurrency support to make your programs clearer not to make them faster if they do get faster that’s wonderful but but that’s not the point today so i said i’d walk through the design and implementation of some programs for four common concur excuse me concurrency patterns that i see often

but before we get to those i want to start with what seems like a really trivial problem but that illustrates one of the most important points about what it means to use concurrency to structure programs a decision that comes up over and over when you design concurrent programs is whether to represent states as code or as data and by as code i mean the control flow in the program so suppose we’re reading characters from a file and we need to scan over a c style quoted string oh hello so the slides aren’t changing

yeah well can you see prologue goroutines for state right now no we see the title slide oh no yeah i was wondering about that because um there was like a border around this thing when i started and then it went away so let me let me just unshare and reshare i have to figure out how to do that in zoom uh unfortunately the keynote menu wants to be up and i don’t know how to get to the zoom menu um ah my screen sharing is paused why is my screen sharing paused can i resume there we go yeah all right i don’t know the zoom box says

your screen sharing is paused so if that now now the border’s back so i’ll watch that all right so um see i was back here so so you know we’re reading a string it’s not a parallel program it’s reading one character at a time so there’s no opportunity for parallelism but there is a good opportunity for concurrency so if we don’t actually care about the exact escape sequences in the string what we need to do is match this regular expression and we don’t have to worry about understanding it exactly

we’ll come back to what it means but but that’s basically all you have to do is implement this regular expression and so you know you probably all know you can turn a regular expression into a state machine and so we might use a tool that generates this code and in this code there’s a single variable state that’s the state of the machine and the loop goes over the state one character at a time reads a character depending on the state and the character changes to a different state until it gets to the end
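
The generated state machine described here is only on the slide, so the following is a rough sketch of what it might look like, not the slide's code. It assumes the usual C-style quoted-string pattern `"([^"\\]|\\.)*"`, and the names `reader`, `readStringFSM`, and the `eof` sentinel are hypothetical additions to make it runnable.

```go
package main

import "fmt"

// eof is a sentinel this sketch uses for end of input (an assumption).
const eof = rune(-1)

// reader returns a readChar-style function that yields s one rune at a time.
func reader(s string) func() rune {
	rs := []rune(s)
	i := 0
	return func() rune {
		if i >= len(rs) {
			return eof
		}
		c := rs[i]
		i++
		return c
	}
}

// readStringFSM keeps its position in the match in the state variable,
// so the progress of the scan is stored as data.
func readStringFSM(readChar func() rune) bool {
	state := 0
	for {
		c := readChar()
		if c == eof {
			return false // input ended before the closing quote
		}
		switch state {
		case 0: // expecting the opening quote
			if c != '"' {
				return false
			}
			state = 1
		case 1: // inside the string
			if c == '"' {
				return true
			}
			if c == '\\' {
				state = 2
			}
		case 2: // character after a backslash: accept anything
			state = 1
		}
	}
}

func main() {
	fmt.Println(readStringFSM(reader(`"a\"b"`)))
	fmt.Println(readStringFSM(reader(`"unterminated`)))
}
```

To follow what this function has matched so far, a reader has to trace the value of `state` by hand.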

and so like this is a completely unreadable program but it’s the kind of thing that you know an auto-generated program might look like and and the important point is that the program state is stored in data in this variable that’s called state and if you can change it to store the state in code that’s often clearer so here’s what i mean um suppose we duplicate the read char calls into each case of the switch so we haven’t made any semantic changes here we just took the read char that was at

the top and we moved it into the middle now instead of setting state and then immediately doing the switch again we can change those into gotos and then we can simplify a little bit further there’s a goto state one that’s right before the state one label we can get rid of that then there’s a um i guess yeah so then there’s uh you know there’s only one way to get to state two so we might as well pull the state two code up and put it inside the if where the goto appears and then you know both sides of that if

now end in goto state one so we can hoist that out and now what’s left is actually a pretty simple program you know state zero is never jumped to so it just begins there and then state one is just a regular loop so we might as well make that look like a regular loop um and now like this is you know looking like a program and then finally we can you know get rid of some variables and simplify a little bit further and um and we can rotate the loop so that you know we don’t do a return true in the middle of the loop we do the

return true at the end and so now we’ve got this program that is actually you know reasonably nice and it’s worth mentioning that it’s possible to clean up you know much less egregious examples you know if you had tried to write this by hand your first attempt might have been the thing on the left where you’ve got this extra piece of state and then you can apply the same kinds of transformations to move that state into the actual control flow and end up at the same program that we have on the right that’s cleaner
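
As a rough sketch of where these transformations end up, the version below keeps the scan position in control flow instead of a state variable. The `eof` sentinel, the end-of-input checks, and the `reader` helper are assumptions added so the example runs on its own; the slide's version may differ in detail.

```go
package main

import "fmt"

// eof is a sentinel this sketch uses for end of input (an assumption).
const eof = rune(-1)

// reader returns a readChar-style function that yields s one rune at a time.
func reader(s string) func() rune {
	rs := []rune(s)
	i := 0
	return func() rune {
		if i >= len(rs) {
			return eof
		}
		c := rs[i]
		i++
		return c
	}
}

// readString holds its state in the program counter: before the loop we
// are expecting the opening quote, inside the loop we are inside the string.
func readString(readChar func() rune) bool {
	if readChar() != '"' {
		return false
	}
	var c rune
	for c != '"' {
		c = readChar()
		if c == eof {
			return false
		}
		if c == '\\' {
			// Skip whatever follows the backslash.
			if readChar() == eof {
				return false
			}
		}
	}
	return true
}

func main() {
	fmt.Println(readString(reader(`"a\"b"`)))
	fmt.Println(readString(reader(`"unterminated`)))
}
```

The body can be read top to bottom: an opening quote, then characters until the closing quote, skipping the character after each backslash.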

so this is you know a useful transformation to keep in mind anytime you have state that kind of looks like it might be just reiterating what’s happening in the program counter um and so you know you can see this in the original state like if state equals zero the program counter is at the beginning of the function and if state equals one or if in escape equals false in the other version the program counter is just inside the for loop and state equals two is you know further down in the for loop

and the benefit of writing it this way instead of with the states is that it’s much easier to understand like i can actually just walk through the code and explain it to you you know if you just read through the code you read an opening quote and then you start looping and then until you find the closing quote you read a character and if it’s a backslash you skip the next character and that’s it right you can just read that off the page which you couldn’t do in the original this version also happens to run

faster although that doesn’t really matter for us um but as i mentioned i’m going to highlight what i think are kind of important lessons as hints for designing your own go programs and this is the first one to convert data state into code state when it makes your programs clearer and again like these are all hints for all of these you should consider it as you know only if it helps you can decide so one problem with this hint is that not all programs have the luxury of

having complete control over their control flow so you know here’s a different example instead of having a read char function that can be called this code is written to have a process char method that you have to hand the character to one at a time and then process char has no choice really but to you know encode its state in an explicit state variable because after every character it has to return back out and so it can’t save the state in the program counter in the stack it has to have the state in an actual variable but

in go we have another choice right because we can’t save the state on that stack and in that program counter but you know we can make another goroutine to hold that state for us so supposing we already have this debugged read string function that we really don’t want to rewrite in this other way we just want to reuse it it works maybe it’s really big and hairy it’s much more complicated than the thing we saw we just want to reuse it and so the way we can do that in go is we can start a new goroutine

that does the read string part and it’s the same read string code as before we pass in the character reader and now here the um you know the init method makes this goroutine to do the character reading and then every time the process char method is called um we send a message to the goroutine on the char channel that says here’s the next character and then we receive a message back that says like tell me the current status and the current status is always either i need more input or you know it basically you know was it okay or not
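
A minimal sketch of this arrangement follows, assuming a `quoter` type with `char` and `status` channels and three status values; those names, and the `run` driver, are illustrative guesses rather than the slide's exact code.

```go
package main

import "fmt"

type Status int

const (
	NeedMoreInput Status = iota
	Success
	BadInput
)

// readString is the pull-style scanner being reused unchanged.
func readString(readChar func() rune) bool {
	if readChar() != '"' {
		return false
	}
	var c rune
	for c != '"' {
		c = readChar()
		if c == '\\' {
			readChar() // skip the escaped character
		}
	}
	return true
}

// quoter adapts readString to a push-style ProcessChar interface.
type quoter struct {
	char   chan rune
	status chan Status
}

// Init starts the goroutine whose stack holds the scanner's state.
func (q *quoter) Init() {
	q.char = make(chan rune)
	q.status = make(chan Status)
	go func() {
		if readString(q.readChar) {
			q.status <- Success
		} else {
			q.status <- BadInput
		}
	}()
	<-q.status // the first readChar call always reports NeedMoreInput
}

// readChar runs in the helper goroutine: ask for input, then wait for it.
func (q *quoter) readChar() rune {
	q.status <- NeedMoreInput
	return <-q.char
}

// ProcessChar hands one character over and reports the resulting status.
func (q *quoter) ProcessChar(c rune) Status {
	q.char <- c
	return <-q.status
}

// run feeds s until the scanner reports something other than NeedMoreInput.
func run(s string) Status {
	var q quoter
	q.Init()
	for _, c := range s {
		if st := q.ProcessChar(c); st != NeedMoreInput {
			return st
		}
	}
	return NeedMoreInput // input ran out; the goroutine is still waiting
}

func main() {
	fmt.Println(run(`"hi"`) == Success)
	fmt.Println(run(`x`) == BadInput)
}
```

In this sketch, calling `ProcessChar` again after `Success` or `BadInput` would block forever, and stopping early (the last line of `run`) leaves the helper goroutine waiting on `q.char`.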

and so um you know this lets us move the program counter that we couldn’t keep on the first stack into the other stack of the goroutine and so using additional goroutines is a great way to hold additional code state and give you the ability to do these kinds of cleanups even if the original structure of the problem makes it look like you can’t but go ahead i i assume you’re fine with uh people asking questions yeah absolutely i just wanted to make sure that yeah yeah definitely please

interrupt um and so so the hint here is to use additional goroutines to hold additional code state and there’s one caveat to this and that is it’s not free to just make goroutines right you have to actually make sure that they exit because otherwise you’ll just accumulate them and so you do have to think about uh you know why does the goroutine exit like you know is it going to get cleaned up and in this case we know that you know q dot parse is going to return where you know parse go

sorry that’s not right um oh sorry the read string here read string is going to return any time it sends a a message that says need more input where’d it go there’s something missing from this slide sorry i went through this last night um so so as we go in we go into init we kick off this goroutine it’s going to call read char a bunch of times and then we read the status once and that that first status is going to happen because the the first call to read char from read string is going to send i need

more input and then we’re going to send a character back um we’re going to send the character back in process char and then every time process char gets called it returns a status and so up until you get um you know need more input you’re going to get the um uh sorry this is not working um you’re going to get need more input for every time you want to read a character and then when it’s done reading characters what i haven’t shown you here what seems to be missing somehow is when things exit and when

things exit let’s see if it’s on this slide yeah so there’s a return success and a return bad input that i’d forgotten about and so uh you know these return a different status and then they’re done so when process char uh you know in in the read string version when it returns you know bad input or success we we say that you know it’s done and so as long as the caller is going through and um you know calling until it gets something that’s not need more input then the goroutine will finish but you

know maybe if we stop early if the caller like hits an eof and stops on its own without telling us that it’s done there’s a goroutine left over and so that could be a problem and so you just you need to make sure that you know when and why each goroutine will exit and the nice thing is that if you do make a mistake and you leave goroutines stuck they just sit there it’s like the best possible bug in the world because they just sit around waiting for you to look at them and all you have to do is remember to

look for them and so you know here’s a very simple program that leaks goroutines and it runs an http server and so you know if we run this it kicks off a whole bunch of f goroutines and they all uh block trying to send to a channel and then it makes the http server and so if i run this program it just sits there and if i type control backslash on a unix system i get a sigquit which makes it crash and dump all the stacks of the goroutines and you can see on the slide that you know it’s going to print over and over

again here’s the goroutine in h called from g called from f and and in a channel send and if you look at the line numbers you can see exactly where they are another option is that since we’re in an http server and the http server imports the net/http/pprof package you can actually just visit the http server’s /debug/pprof/goroutine url which gives you the stacks of all the running goroutines and unlike the crash dump it takes a little more effort and it deduplicates the goroutines based on their stacks
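
The leak itself can be sketched without an http server. The example below assumes the `f`, `g`, `h` call chain named in the talk and uses `pprof.Lookup("goroutine")` from `runtime/pprof` to get the same kind of goroutine profile in-process; `leak`, `leakedAfter`, and `goroutineDump` are hypothetical helper names.

```go
package main

import (
	"bytes"
	"fmt"
	"runtime"
	"runtime/pprof"
)

func f(c chan int) { g(c) }
func g(c chan int) { h(c) }
func h(c chan int) { c <- 1 } // blocks forever: nobody ever receives

// leak starts n goroutines that will all get stuck in h.
func leak(n int) {
	c := make(chan int)
	for i := 0; i < n; i++ {
		go f(c)
	}
}

// leakedAfter reports how many more goroutines exist after leaking n.
func leakedAfter(n int) int {
	before := runtime.NumGoroutine()
	leak(n)
	return runtime.NumGoroutine() - before
}

// goroutineDump returns the goroutine profile in its text form.
func goroutineDump() string {
	var buf bytes.Buffer
	if err := pprof.Lookup("goroutine").WriteTo(&buf, 1); err != nil {
		return ""
	}
	return buf.String()
}

func main() {
	fmt.Println("leaked goroutines:", leakedAfter(100))
	fmt.Print(goroutineDump())
}
```

In a long-running server, importing `net/http/pprof` for its side effects registers the `/debug/pprof/` handlers on the default mux, so the same information is available from a browser.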

and so and then it sorts them by how many there are of each stack and so if you have a goroutine leak the leak shows up at the very top so in this case you’ve got 100 goroutines stuck in h called from g called from f and then we can see there’s like one of a couple other goroutines and we don’t really care about them and so you know this is a new hint that it just it’s really really useful to look for stuck goroutines by just going to this endpoint all right so that was kind of the warm-up now i

want to look at the first real concurrency pattern which is a publish subscribe server so publish subscribe is a way of structuring a program so that you decouple the parts that are publishing interesting events from the things that are subscribing to them and there’s a publish subscribe or pub sub server in the middle that connects those so the individual publishers and the individual subscribers don’t have to be aware of exactly who the other ones are so you know on your android phone um an app might publish a make a phone

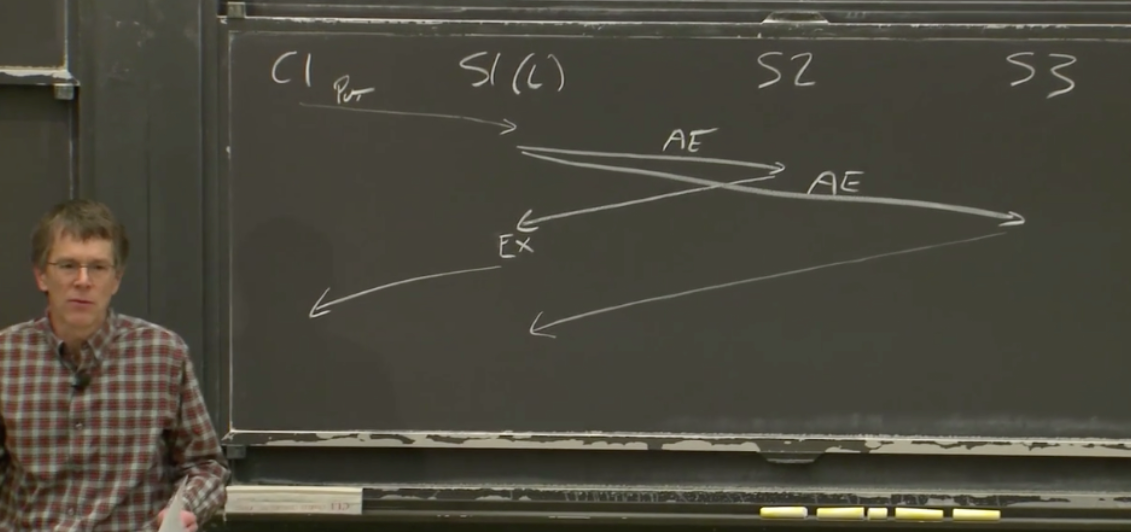

call event and then the dialer might subscribe to that and actually start and you know help dial and and so in a real pub sub server there are ways to filter events based on like what kind they are so that when you publish a make a phone call event like it doesn’t go to your email program but you know for now we’re just going to assume that the filtering is taken care of separately and we’re just worried about the actual publish and subscribe and the concurrency of that so here’s an api we want to implement with any number of

clients that can call subscribe with a channel and afterwards events that are published will be sent on that channel and then when a client is no longer interested it can call cancel and pass in the same channel to say stop sending me events on that channel and the way that cancel will signal that it really is done sending events on that channel is it will close the channel so that the caller can keep receiving events until it sees the channel get closed and then it knows that the cancel has taken effect

um so notice that the information is only flowing one way on the channel right you can send to the channel and then the receiver can receive from it and the information flows from the sender to the receiver and it never goes the other way so closing is also a signal from the sender to the receiver that all the sending is over the receiver cannot close the channel to tell the sender like i don’t want you to send anymore because that’s information going the opposite direction and it’s just a lot easier to reason about

if the information only goes one way and of course if you need communication in both directions you can use a pair of channels and it often turns out to be the case that those uh different directions may have different types of data flowing like before we saw that there were runes going in one direction and status updates going in the other direction so how do we implement this api here’s a pretty basic implementation that you know could be good enough we have a server and the server state is a map of registered subscriber channels

protected by a lock we initialize the server by just allocating the map and then to publish the event we just send it to every registered channel to subscribe a new channel we just add it to the map and to cancel we take it out of the map and then because these are all um these are all methods that might be called from multiple goroutines um we need to call lock and unlock around these to um you know protect the map and notice that i wrote defer unlock right after the lock so i don’t have to remember to unlock it later
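
The basic implementation just described might look roughly like the sketch below. The `Event` type is a placeholder assumption (the talk does not say what an event contains), and the panic messages are illustrative.

```go
package main

import (
	"fmt"
	"sync"
)

// Event is a stand-in type for this sketch.
type Event string

// Server is a pub/sub server whose subscriber set is guarded by a mutex.
type Server struct {
	mu  sync.Mutex
	sub map[chan<- Event]bool
}

func (s *Server) Init() {
	s.sub = make(map[chan<- Event]bool)
}

// Publish sends e to every subscriber while holding the lock, so one slow
// receiver delays all the others.
func (s *Server) Publish(e Event) {
	s.mu.Lock()
	defer s.mu.Unlock()

	for c := range s.sub {
		c <- e
	}
}

func (s *Server) Subscribe(c chan<- Event) {
	s.mu.Lock()
	defer s.mu.Unlock()

	if s.sub[c] {
		panic("pubsub: already subscribed")
	}
	s.sub[c] = true
}

// Cancel closes c so the receiver can tell that no more events will arrive.
func (s *Server) Cancel(c chan<- Event) {
	s.mu.Lock()
	defer s.mu.Unlock()

	if !s.sub[c] {
		panic("pubsub: not subscribed")
	}
	close(c)
	delete(s.sub, c)
}

func main() {
	var s Server
	s.Init()
	c := make(chan Event, 2) // buffered so Publish does not block here
	s.Subscribe(c)
	s.Publish("hello")
	s.Cancel(c)
	for e := range c {
		fmt.Println(e)
	}
}
```

The buffered channel in `main` is only there so that this single-goroutine demonstration does not block inside `Publish`.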

uh you’ve probably all seen this you know it’s sort of a nice idiom to just do the lock unlock and then you know have a blank line and have that be its own kind of paragraph in the code one thing i want to point out is that using defer makes sure that the mutex gets unlocked even if you have multiple returns from the function so you can’t forget but it also makes sure that it gets unlocked if you have a panic like in subscribe and cancel where there’s you know panics for misuse and there is a subtlety here about if

you might not want to unlock the mutex if the panic happened while the thing that was locked is in some inconsistent state but i’m going to ignore that for now in general you try to avoid having the the things that might panic happen while you’re you know potentially an inconsistent state and i should also point out that the use of panic at all in subscribe and cancel implies that you really trust your clients not to misuse the interface that it is a program error worth you know tearing down the entire program

potentially for that to happen and in a bigger program where other clients were using this api you’d probably want to return an error instead and not have the possibility of taking down the whole program but panicking simplifies things for now and you know error handling in general is kind of not the topic today a more important concern with this code than panics is what happens if a goroutine is slow to receive events so all the operations here are done holding the mutex which means all the clients kind of have to proceed in

lockstep so during publish there’s a loop that’s sending on the channels sending the event to every channel and if one subscriber falls behind the next subscriber doesn’t get the event until that slow subscriber you know wakes up and actually gets the the event off off that channel and so one slow subscriber can slow down everyone else and you know forcing them to proceed in lockstep this way is not always a problem if you’ve you know documented the restriction and for whatever reason you know how the

clients are written and you know that they won’t ever fall too far behind this could be totally fine it’s a really simple implementation and um and it has nice properties like on return from publish you know that the event has actually been handed off to each of the other goroutines you don’t know that they’ve started processing it but you know it’s been handed off and so you know maybe that’s good enough and you could stop here a second option is that if you need to tolerate just a little bit of slowness

on the the subscribers then you could say that they need to give you a buffered channel with room for a couple events in the buffer so that you know when you’re publishing you know as long as they’re not too far behind there’ll always be room for the new event to go into the channel buffer and then the actual publish won’t block for too long and again maybe that’s good enough if you’re sure that they won’t ever fall too far behind you get to stop there but in a really big program you do want to cope more gracefully with

arbitrarily slow subscribers and so then the question is what do you do and so you know in general you have three options you can slow down the event generator which is what the previous solutions implicitly do because publish stops until the subscribers catch up or you can drop events or you can queue an arbitrary number of past events those are pretty much your only options so we talked about you know publish and slowing down the event generator there’s a middle ground where you coalesce the events or you drop them

um so that you know the subscriber might find out that you know hey you missed some events and i can’t tell you what they were because i didn’t save them but but i’m at least going to tell you you missed five events and then maybe it can do something else to try to catch up and this is the kind of approach that um that we take in the profiler so in the profiler if you’ve used it uh there’s a goroutine that uh fills the profile on a signal handler actually with profiling events and then there’s a

separate goroutine whose job is to read the data back out and like write it to disk or send it to an http request or whatever it is you’re doing with profile data and there’s a buffer in the middle and if the receiver from the profile data falls behind when the buffer fills up we start adding entries to a final profile entry that just has a single entry that’s a function called runtime.lostProfileData

and so if you go look at the profile you see like hey the program spent five percent of its time in lost profile data that just means you know the profile reader was too slow and it didn’t catch up and and we lost some of the profile but we’re clear about exactly you know what the error rate is in the profile and you pretty much never see that because all the readers actually do keep up but just in case they didn’t you have a pretty clear signal um an example of purely dropping the events is the os/signal package

where um you have to pass in a channel that will be ready to receive the signal a signal like sighup or sigquit and when the signal comes in the runtime tries to send to each of the channels that subscribed to that signal and if it can’t send to it it just doesn’t it’s just gone um because you know we’re in a signal handler we can’t wait and so what the callers have to do is they have to pass in a buffered channel and if they pass in a buffered channel that has you know length at least one

buffer length at least one and they only register that channel to a single signal then you know that if a signal comes in you’re definitely going to get told about it if it comes in twice you might only get told about it once but that’s actually the same semantics that unix gives to processes for signals anyway so that’s fine so those are both examples of dropping or coalescing events and then the third choice is that you might actually just really not want to lose any events it might just be really important that you

never lose anything in which case you know you can queue an arbitrary number of events you can somehow arrange for the program to just save all the events that the you know slow subscriber hasn’t seen yet somewhere and and give them to the subscriber later and it’s really important to think carefully before you do that because in a distributed system you know there’s always slow computers always computers that have fallen offline or whatever and they might be gone for a while and so you don’t want to introduce

unbounded queuing in general you want to think very carefully before you do that and think well you know how unbounded is it really and can i tolerate that and so like that’s a reason why channels don’t have just an unbounded buffering it’s really almost never the right choice and if it is the right choice you probably want to build it very carefully um and so but we’re going to build one just to see what it would look like and before we do that i just want to adjust the program a little bit so we have this mutex in the code

and the mutex is an example of keeping the state whether you’re locked or not in a state variable but we can also move that into a program counter variable by putting it in a different goroutine and so in this case we can start a new goroutine that runs a function called s.loop and it handles requests sent on three new channels publish subscribe and cancel and so in init we make the channels and then we kick off s.loop and s.loop is sort of the amalgamation of the previous method

bodies and it just receives from any of the three channels a request a publish a subscribe or a cancel request and it does whatever was asked and now that map the subscriber map can be just a local variable in s.loop and and so um you know it’s the same code but now that data is clearly owned by s.loop

nothing else could even get to it because it’s a local variable and then we just need to change the original methods to send the work over to the loop goroutine and so uppercase publish now sends on lowercase publish the channel the event that it wants to publish and similarly subscribe and cancel they create a request that has a channel uh that we want to subscribe and also a channel to get the answer back and they send that into the loop and then the loop sends back the answer and so i referred to transforming the program this way as like converting the

mutex into a goroutine because we took the data state of the mutex there’s like a lock bit inside it and now that lock bit is implicit in the program counter of the loop um it’s very clear that you can’t ever have you know a publish and subscribe happening at the same time because it’s just single threaded code and just you know executes in sequence on the other hand the original version had a kind of like clarity of state where you could sort of inspect it and and reason about well this is the

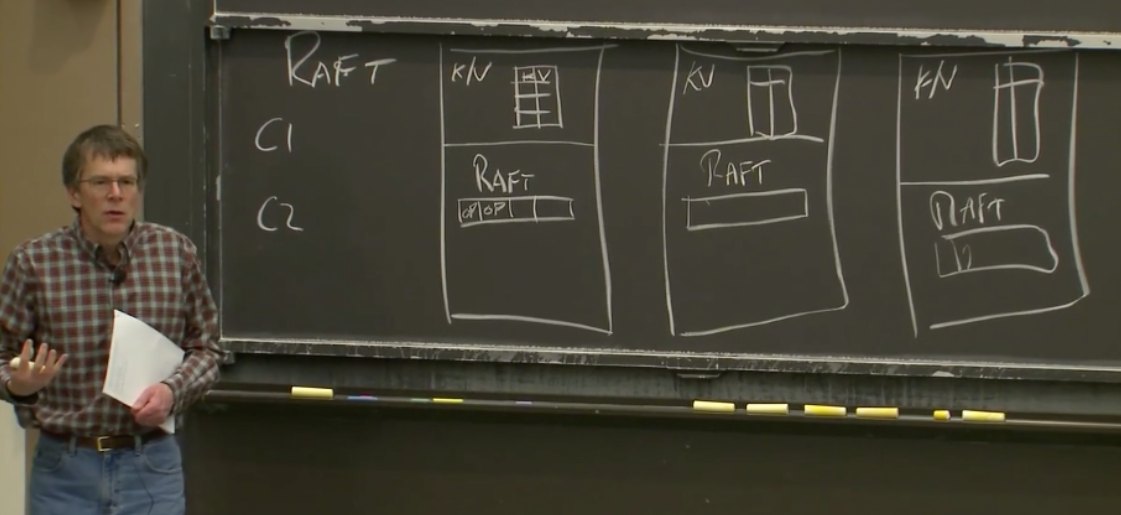

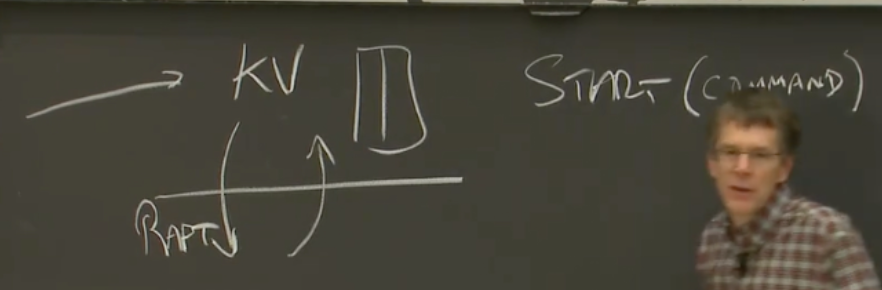

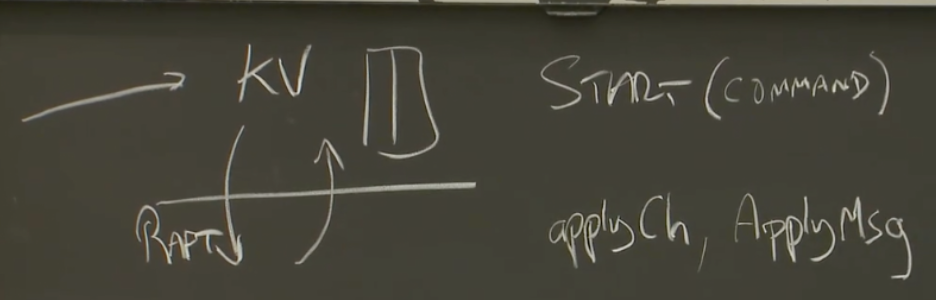

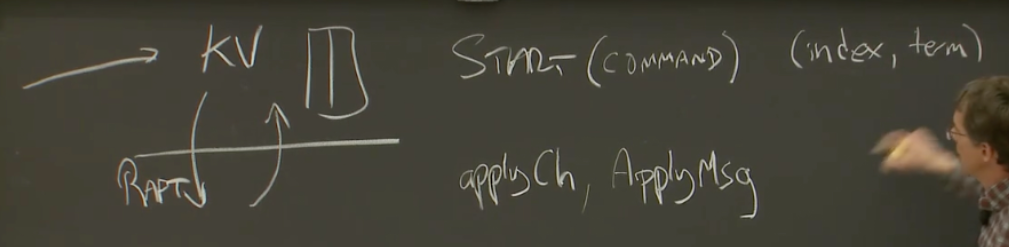

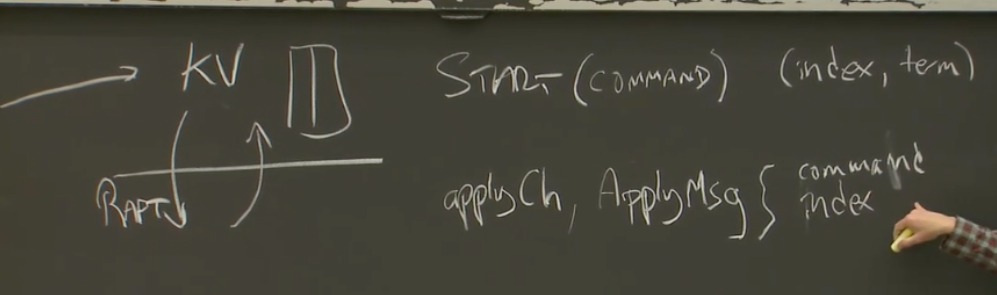

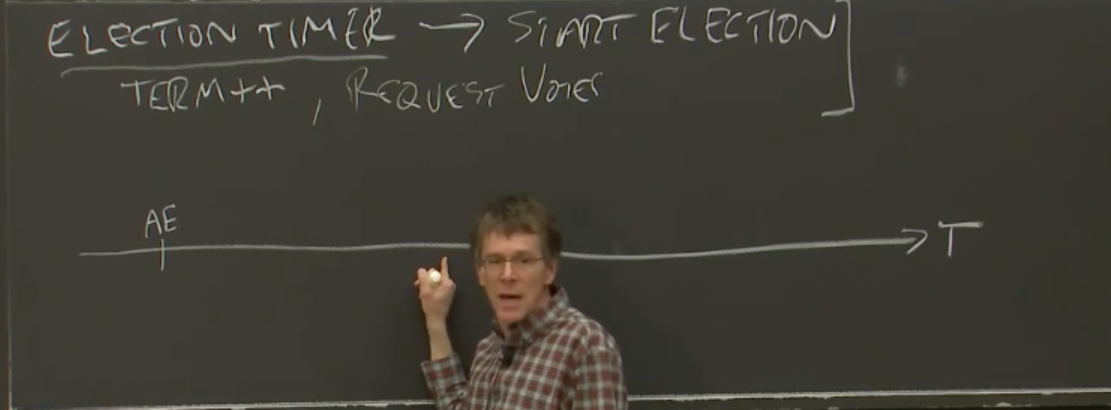

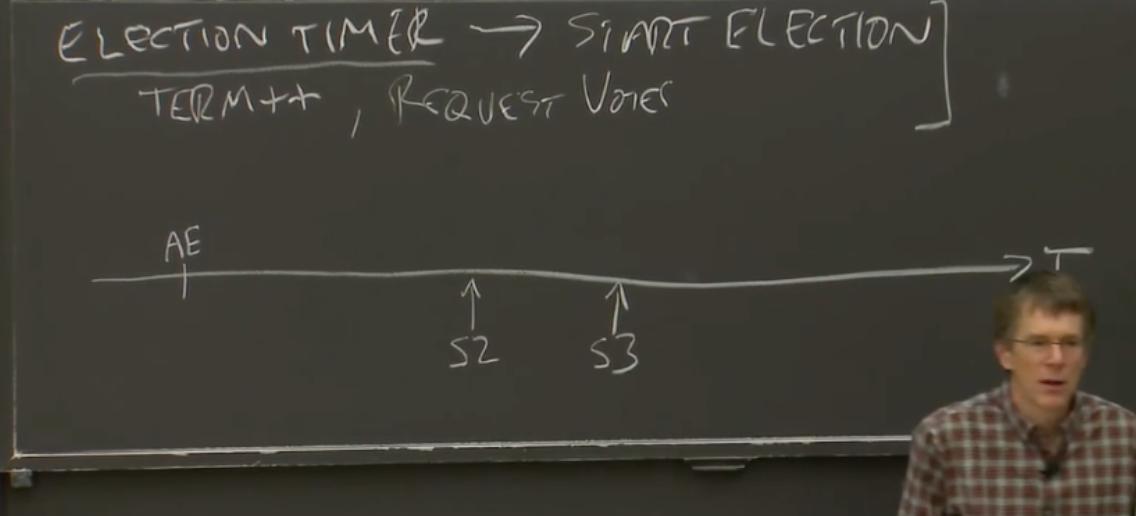

important state and and it’s harder in the goroutine version to see like what’s important state and what’s kind of incidental state from just having a goroutine and in a given situation you know one might be more important than the other so a couple years ago i did all the labs for the class when it first switched to go and and raft is a good example of where you probably prefer the state with the mutexes because raft is so different from most concurrent programs in that like each replica is just kind of profoundly

uncertain of its state right like the state transitions you know one moment you think you’re the leader and the next moment you’ve been deposed like one moment your log has ten entries the next moment you find actually no it only has two entries and so being able to manipulate that state directly rather than having to you know somehow get it in and out of the program counter makes a lot more sense for raft but that’s pretty unique in most situations it cleans things up to put the state in the program counter
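
The mutex-to-goroutine conversion discussed before the raft aside can be sketched as follows. The `Event` type, the `subReq` request struct, and the use of a `bool` reply are assumptions made for illustration; the slides may structure the request differently.

```go
package main

import "fmt"

// Event is a stand-in type for this sketch.
type Event string

// subReq carries a subscriber channel plus a channel for the loop's answer.
type subReq struct {
	c  chan<- Event
	ok chan bool
}

type Server struct {
	publish   chan Event
	subscribe chan subReq
	cancel    chan subReq
}

func (s *Server) Init() {
	s.publish = make(chan Event)
	s.subscribe = make(chan subReq)
	s.cancel = make(chan subReq)
	go s.loop()
}

// loop owns the subscriber map; no other goroutine can reach it.
// In this sketch the loop never exits.
func (s *Server) loop() {
	sub := make(map[chan<- Event]bool)
	for {
		select {
		case e := <-s.publish:
			for c := range sub {
				c <- e
			}
		case r := <-s.subscribe:
			if sub[r.c] {
				r.ok <- false
				break // leaves the select, not the for loop
			}
			sub[r.c] = true
			r.ok <- true
		case r := <-s.cancel:
			if !sub[r.c] {
				r.ok <- false
				break
			}
			close(r.c)
			delete(sub, r.c)
			r.ok <- true
		}
	}
}

func (s *Server) Publish(e Event) { s.publish <- e }

func (s *Server) Subscribe(c chan<- Event) {
	r := subReq{c: c, ok: make(chan bool)}
	s.subscribe <- r
	if !<-r.ok {
		panic("pubsub: already subscribed")
	}
}

func (s *Server) Cancel(c chan<- Event) {
	r := subReq{c: c, ok: make(chan bool)}
	s.cancel <- r
	if !<-r.ok {
		panic("pubsub: not subscribed")
	}
}

func main() {
	var s Server
	s.Init()
	c := make(chan Event, 2)
	s.Subscribe(c)
	s.Publish("hello")
	s.Cancel(c)
	for e := range c {
		fmt.Println(e)
	}
}
```

Because the loop handles one request at a time, a publish and a subscribe can never overlap, which is the property the mutex provided before.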

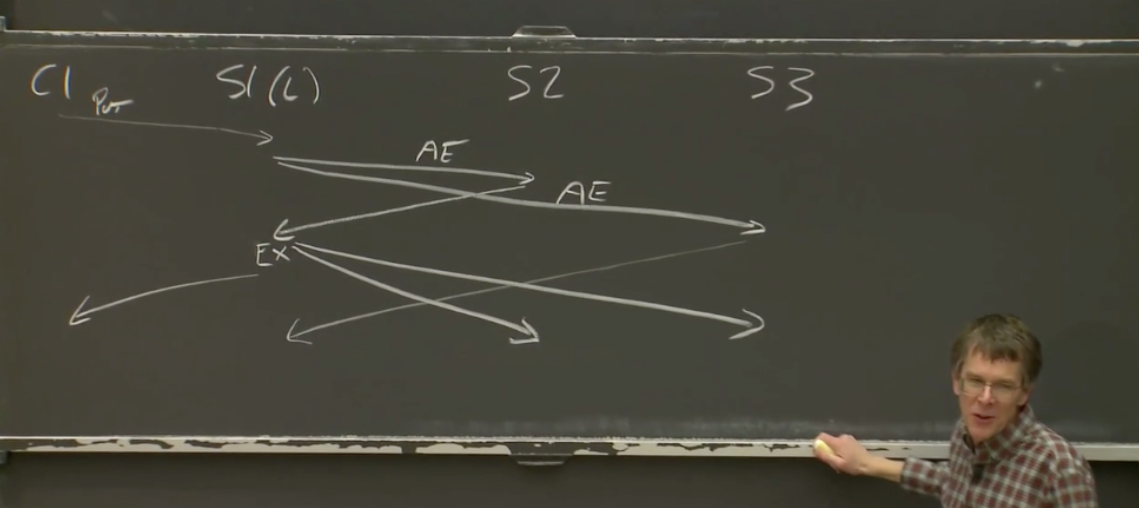

all right so in order to deal with the slow subscribers now we’re going to add some helper goroutines and their job is to manage a particular subscriber’s backlog and keep the overall program from blocking and so this is the helper goroutine and the main loop goroutine will send the events to the helper which we then trust because we wrote it not to fall arbitrarily behind and then the helper’s job is to queue events if needed and send them off to the subscriber all right so this actually has um two

problems the first is that if there’s nothing in the queue then the select is actually wrong to try to offer q of zero and in fact just evaluating q of zero at the start of the select will panic because the queue is empty and so we can fix these by setting up the arguments separately from the select and in particular we need to make a channel send out that’s going to be nil which is never able to proceed in a select um as we know when we don’t want to send and it’s going to be the actual out channel when we do want to send and

then we have to have a separate variable that holds the event that we’re going to send it will only you know actually read from q of 0 if there’s something in the queue the second thing that’s wrong is that we need to handle closing of the input channel because when the input channel closes we need to flush the rest of the queue and then we need to close the output channel so to check for that we change the select from just doing e equals receive from in to e comma ok equals receive from in and the comma

ok will tell us whether or not the channel is actually sending real data or else it’s closed and so when ok is false we can set in to nil to say let’s stop trying to receive from in there’s nothing there we’re just going to keep getting told that it’s closed and then when the queue is finally empty we can exit the loop and so we change the for condition to say we want to keep executing the loop as long as there actually still is an input channel or there’s something

to write back to the output channel and then once both of those are not true anymore it’s time to close it’s time to exit the loop and we close the output channel and we’re done and so now we’ve correctly propagated the closing of the input channel to the output channel so that was the helper and the server loop used to look like this and to update it we just changed the subscription map before it was a map from subscribe channels to bools it was just basically a set and now it’s a map from subscribe

channel to helper channel and every time we get a new subscription we make a helper channel we kick off a helper goroutine and we record the helper channel in the subscription map instead of the actual channel and then the rest of uh the rest of the loop actually barely changes at all so i do want to point out that like if you wanted to have a different strategy for you know what you do with uh clients that fall too far behind that can all go in the helper goroutine the code on the screen right now is completely unchanged so we’ve we’ve

completely separated the publish subscribe maintaining the actual list of subscribers problem from the what do you do when things get too slow problem and so it’s really nice that you’ve got this clean separation of concerns into completely different goroutines and that can help you you know keep your program simpler and so that’s the general hint is that you can use goroutines a lot of the time to separate independent concerns all right so um the second pattern for today is a work scheduler
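
Before moving on, the helper goroutine built up over the previous paragraphs, with both fixes applied (the nil send channel and the closed-input handling), can be sketched like this. The `Event` type and the variable names `sendOut` and `next` are assumptions; the queue is an unbounded slice, which is exactly the trade-off warned about earlier.

```go
package main

import "fmt"

// Event is a stand-in type for this sketch.
type Event string

// helper queues events from in and forwards them to out, so a slow
// receiver on out never blocks whoever is sending on in.
func helper(in <-chan Event, out chan<- Event) {
	var q []Event
	for in != nil || len(q) > 0 {
		// Only offer a send when there is something queued. A nil
		// channel is never ready in a select, which disables the case.
		var sendOut chan<- Event
		var next Event
		if len(q) > 0 {
			sendOut = out
			next = q[0]
		}

		select {
		case e, ok := <-in:
			if !ok {
				in = nil // input closed: stop receiving, drain the queue
				break
			}
			q = append(q, e)
		case sendOut <- next:
			q = q[1:]
		}
	}
	close(out) // propagate the close once everything has been delivered
}

func main() {
	in := make(chan Event)
	out := make(chan Event)
	go helper(in, out)

	// The receiver has not started yet, but these sends still complete.
	in <- "a"
	in <- "b"
	close(in)

	for e := range out {
		fmt.Println(e)
	}
}
```

A different policy for slow subscribers, such as dropping or coalescing events, would change only the body of `helper`.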

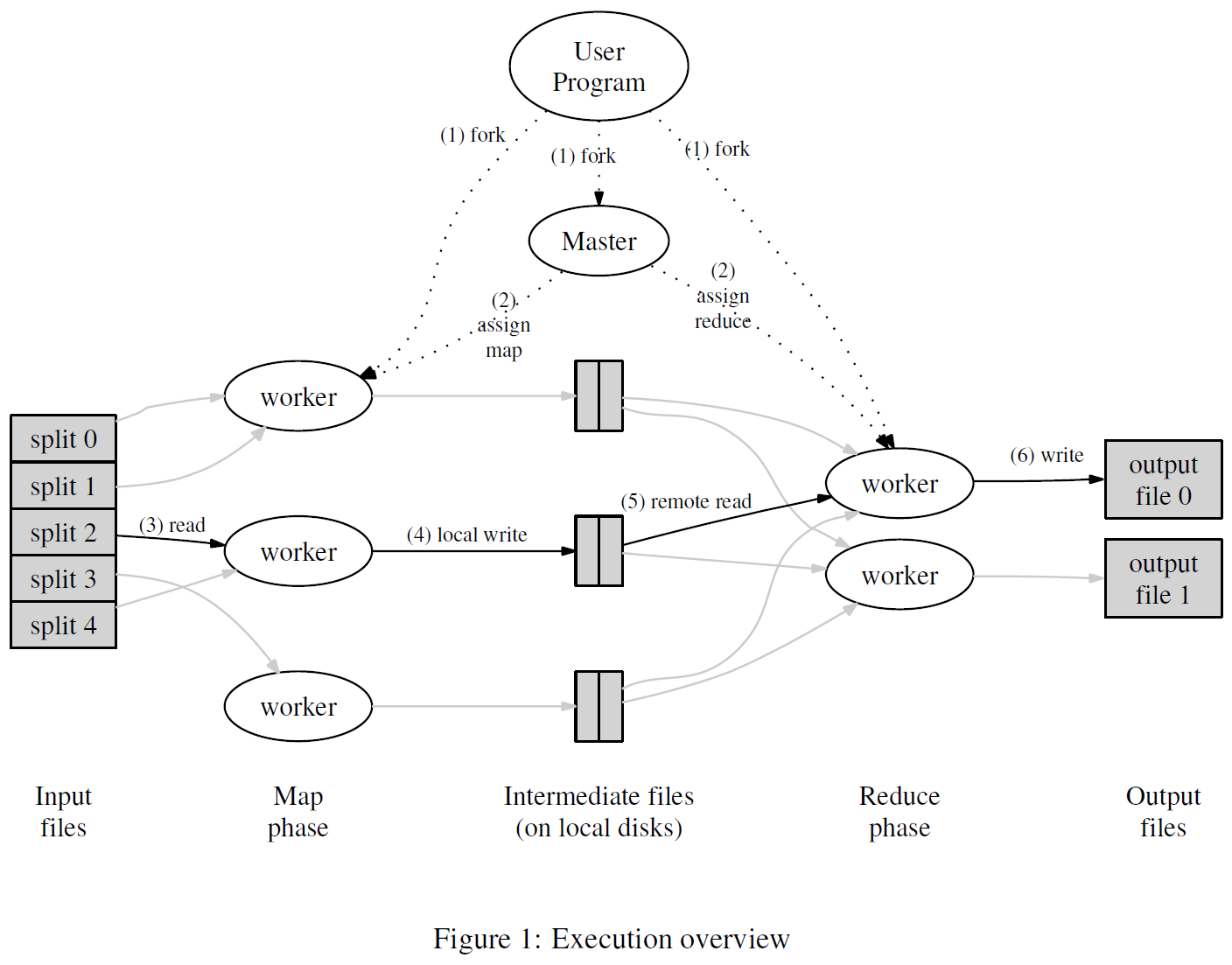

and you did one of these in lab one for mapreduce and i’m just going to build up to that and this doesn’t do all the rpc stuff it just assumes that there are channel based interfaces to all the servers so we have this function Schedule it takes a fixed list of servers has a number of tasks to run and it has just this abstracted function call that you call to run the task on a specific server you can imagine it was doing the rpcs underneath so we’re going to need some way to keep

track of which servers are available to execute new tasks and so one option is to use our own stack or queue implementation but another option is to use a channel because it’s a good synchronized queue and so we can send into the channel to add to the queue and receive from it to pop something off and in this case we’ll make the queue be a queue of servers and we’ll start off it’s a queue of idle servers servers that aren’t doing any work for us right now and we’ll start off by just initializing

it by sending all the known servers into the idle list and then we can loop over the tasks and for every task we kick off a goroutine and its job is to pull a server off the idle list run the task and then put the server back on and this loop body is another example of the earlier hint to use goroutines to let independent things run independently because each task is running as a separate concern they’re all running in parallel unfortunately there are two problems with this program the first one is that the closure that’s

running as a new goroutine refers to the loop iteration variable which is task and so by the time the goroutine starts executing the loop has probably continued and done a task plus plus and so it’s actually getting the wrong value of task you’ve probably seen this by now and of course the best way to catch this is to run the race detector and at google we even encourage teams to set up canary servers that run the race detector and split off something like 0.1 percent of their traffic to it just to catch races that might be in the production system and finding a bug with the race detector is way better than having to debug some corruption later

so there are two ways to fix this race the first way is to give the closure an explicit parameter and pass it in and the go statement requires a function call specifically for this reason so that you can set specific arguments that get evaluated in the context of the original goroutine and then get copied to the new

goroutine and so in this case we can declare a new argument task2 we can pass task to it and then inside the goroutine task2 is a completely different copy of task and i only named it task2 to make it easier to talk about but of course there’s a bug here and the bug is that i forgot to update task inside the function to refer to task2 instead of task and so we basically never do that what we do instead is we just give it the same name so that it’s impossible now for the code inside the goroutine to

refer to the wrong copy of task um that was the first way to fix the race there’s a second way which is you know sort of cryptic the first time you see it but it amounts to the same thing and that is that you just make a copy of the the variable inside the loop body so every time a colon equals happens that creates a new variable so in the for loop in the outer for loop there’s a colon equals at the beginning and there’s not one the rest of the loop so that’s all just one variable for the entire loop

whereas if we put a colon equals inside the body every time we run an iteration of the loop that’s a different variable so if the go function closure captures that variable those will all be distinct so we can do the same thing we did with task2 and this time i remember to update the body but just like before it’s too easy to forget to update the body and so typically you write task colon equals task which looks kind of magical the first time you see it but that’s what it’s for
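As a small illustration of the `task := task` idiom just described, here is a sketch in which each goroutine records the task number it sees. The function name `collect` and the use of `sync.WaitGroup` and `sync.Mutex` are assumptions for the example, not part of the lecture's scheduler. Since Go 1.22 a `for` loop already creates a fresh variable per iteration, so the explicit copy matters mainly for code built with older language versions, but it is harmless either way.

```go
package main

import (
	"fmt"
	"sort"
	"sync"
)

// collect starts one goroutine per task and returns the task numbers the
// goroutines observed, sorted. (Hypothetical helper for illustration.)
func collect(numTask int) []int {
	var (
		mu   sync.Mutex
		wg   sync.WaitGroup
		seen []int
	)
	for task := 0; task < numTask; task++ {
		task := task // new variable for this iteration; the closure captures it
		wg.Add(1)
		go func() {
			defer wg.Done()
			mu.Lock()
			seen = append(seen, task)
			mu.Unlock()
		}()
	}
	wg.Wait()
	sort.Ints(seen)
	return seen
}

func main() {
	fmt.Println(collect(5)) // prints [0 1 2 3 4]
}
```

Running a program like this with `go run -race` is the way the race detector mentioned above would flag the version without the copy on older Go versions.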

all right so i said there were two bugs in the program the first one was this race on task and the second one is that we didn’t actually do anything after we kicked off all the tasks we’re not waiting for them to be done and in particular we’re kicking them off way too fast because if there’s like a million tasks you’re going to kick off a million goroutines and they’re all just going to sit waiting for one of the five servers which is kind of inefficient and so what

we can do is we can pull the fetching of the the next idle server up out of the go routine and we pull it up out of the go routine now we’ll only kick off a go routine when there is a next server to use and then we can kick it off and and you know use that server and put it back and the using the server and put it back runs concurrently but doing the the fetch of the idle server inside the loop slows things down so that there’s only ever now number of servers go routines running instead of number of tasks

and that receive is essentially creating some back pressure to slow down the loop so it doesn’t get too far ahead and then i mentioned we have to wait for the task to finish and so we can do that by just at the end of the loop uh going over the the list again and pulling all the servers out and we’ve pulled you know the right number of servers out of the idle list that means they’re all done and so that’s that’s the full program now to me the most important part of this is that you still get to write a for

loop to iterate over the tasks there’s lots of other languages where you have to do this with state machines or some sort of callbacks and you don’t get the luxury of encoding this in the control flow um and so this is a you know much cleaner way where you can just you know use a regular loop but there are some some changes we could make some improvements and so one improvement is to notice that there’s only one go routine that makes requests of a server at a particular time so instead of having one go routine per

task maybe we should have one go routine per server because there are probably going to be fewer servers than tasks and to do that we have to change from having a channel of idle servers to a channel of you know yet to be done tasks and so we’ve renamed the idle channel to work and then we also need a done channel to count um you know how many uh tasks are done so that we know when we’re completely finished and so here there’s a new function run tasks and that’s going to be the per server function and we kick off one of

them for each server and runTasks’s job is just to loop over the work channel run the tasks and when the server is done we send true to done and the server tells us that it’s done and the server exits when the work channel gets closed that’s what makes that for loop actually stop so then having kicked off the servers we can then just sit there in a loop and send each task to the work channel close the work channel and say hey there’s no more work coming all the servers you should finish and then and

then exit and then wait for all the servers to tell us that they’re done so in the lab there were a couple complications one was that you know you might get new servers at any given time um and so we could change that by saying the servers come in on a channel of strings and and that actually fits pretty well into the current structure where you know when you get a new server you just um kick off a new uh run tasks go routine and so the only thing we have to change here is to put that loop into its own go routine so that while

we’re sending tasks to servers we can still accept new servers and kick off the helper go routines but now we have this problem that we don’t really have a good way to tell when all the servers are done because we don’t know how many servers there are and so we could try to like maintain that number as servers come in but it’s a little tricky and instead we can count the number of tasks that have finished so we just move the done sending true to done up a line so that instead of doing it per server

we now do it per task and then at the end of the loop or at the end of the function we just have to wait for the right number of tasks to be done and so now again we sort of know why these are going to finish there’s actually a deadlock still and that is that if the number of tasks is too big actually i think always you’ll get a deadlock and if you run this you get this nice thing where the runtime tells you hey your goroutines are stuck and the problem is that we have this runTasks

server loop and the server loop is trying to say hey i’m done and you’re trying to say hey here’s some more work so if you have more than one task you’ll run into this deadlock i guess that is if you have more tasks than servers you’re trying to send the next task to a server and all the servers are trying to say hey i’m done with the previous task but you’re not there to receive from the done channel and so again it’s really nice

that the goroutines just hang around and wait for you to look at them and we can fix this one way to fix this would be to add a separate loop that actually does a select that either sends some work or accounts for some of the work being done that’s fine but a cleaner way to do this is to take the work sending loop the task sending loop and put it in its own goroutine so now it’s running independently of the counting loop and the counting loop can run and unblock servers that are done with certain tasks while

other tasks are still being sent but the simplest possible fix for this is to just make the work channel big enough that you’re never going to run out of space because we might decide that having a goroutine per task is a couple kilobytes per task but an extra int in the channel is eight bytes so probably you can spend eight bytes per task and so if you can you just make the work channel big enough that you know that all the sends on work are never going to block and you’ll always get down to the counting loop

at the end pretty quickly and so doing that actually sets us up pretty well for the other wrinkle in the lab which is that sometimes calls can time out and here i’ve modeled it by the call returning false to just say hey it didn’t work and so in runTasks it’s really easy to say if the call succeeds then you’re done but if it fails just put the task back on the work list and because it’s a queue not a stack

putting it back on the work list is very likely to hand it to some other server and so that will probably succeed because it’s some other server i mean this is all kind of hypothetical but it fits really well into the structure that we’ve created all right and the final change is that because the server goroutines are sending on work we do have to wait to close it until we know that they’re done sending because again you can’t close before they finish sending
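Putting these steps together, one possible shape for the scheduler is sketched below. The names `Schedule`, `runTasks`, `work`, and `done` follow the lecture; the `exit` channel used to stop the goroutine that watches for new servers, and the exact signature, are assumptions for this sketch. The work channel is buffered to hold every task so that neither the initial sends nor the retries can block, and the close of `work` happens only after all tasks have been counted as done.

```go
package main

import "fmt"

// Schedule runs numTask tasks on servers that arrive over a channel.
// call reports false when a task failed and should be retried.
func Schedule(servers chan string, numTask int, call func(srv string, task int) bool) {
	work := make(chan int, numTask) // big enough that sends never block
	done := make(chan bool)
	exit := make(chan bool) // assumed name: tells the server watcher to stop

	runTasks := func(srv string) {
		for task := range work {
			if call(srv, task) {
				done <- true
			} else {
				work <- task // retry; the queue likely hands it to another server
			}
		}
	}

	// Accept new servers while tasks are being handed out.
	go func() {
		for {
			select {
			case srv := <-servers:
				go runTasks(srv)
			case <-exit:
				return
			}
		}
	}()

	for task := 0; task < numTask; task++ {
		work <- task
	}
	for i := 0; i < numTask; i++ {
		<-done
	}
	close(work) // safe only now: no goroutine can still be sending on work
	exit <- true
}

func main() {
	servers := make(chan string, 2)
	servers <- "s1"
	servers <- "s2"
	Schedule(servers, 5, func(srv string, task int) bool {
		fmt.Println(srv, "ran task", task)
		return true
	})
}
```

The order of the printed lines varies from run to run, because the two server goroutines pull from the work channel concurrently.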

and so we just have to move the close until after we’ve counted that all the tasks are done and sometimes we get to this point and people ask why can’t you just kill goroutines why not just be able to say look hey kill all the server goroutines at this point we know that they’re not needed anymore and the answer is that the goroutine has state and it’s interacting with the rest of the program and if it all of a sudden just stops it’s sort of like it hung right and

maybe it was holding a lock maybe it was in the middle of some sort of communication with some other goroutine that was kind of expecting an answer so we need to find some way to tear them down more gracefully and that’s by telling them explicitly hey you’re done you can go away and then they can clean up however they need to clean up speaking of cleaning up there’s actually one more thing we have to do which is to shut down the loop that’s watching for new

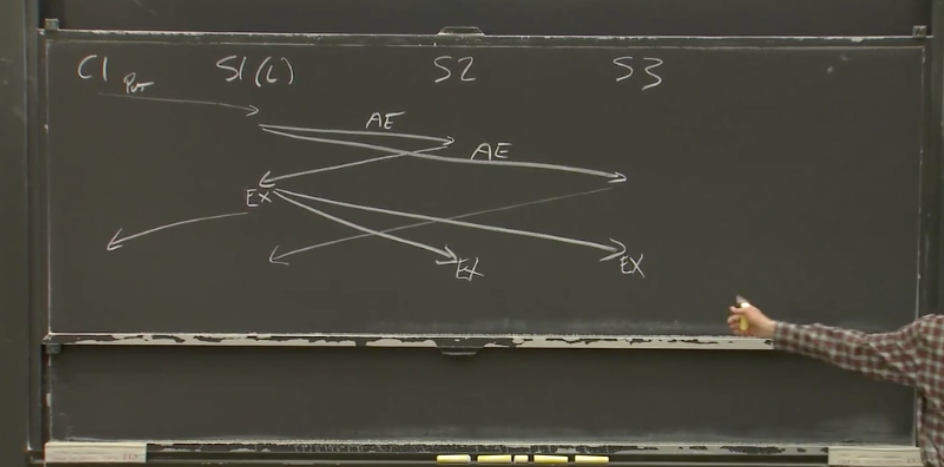

servers and so we do have to put a select in here where the thing that’s waiting for new servers on the server channel we have to tell it okay we’re done just stop watching for new servers because all the servers are gone and we could make this the caller’s problem but this is actually fairly easy to do all right so pattern number three which is a client for a replicated server or service so here’s the interface that we want to implement we have some service that we want that is replicated for

reliability and it’s okay for a client to talk to any one of these servers and so the the replicated client is given a list of servers the uh the arguments to init is a list of servers and a function that lets you call one of the servers with a particular argument set and get a reply and then being given that during init the replicated client then provides a call method that doesn’t tell you what server it’s going to use it just finds a good server to use and it keeps the same keeps using the same server for as long as it can until

it finds out that that server is no good so in this situation there’s almost no shared state that you need to isolate and so like the only state that persists from one call to the next is what server did i use last time because i’m going to try to use that again so in this case that’s totally fine for a mutex i’m just going to leave it there it’s always okay to use mutex if that’s the cleanest way to write the code you know some people get the wrong impression from how much we talk about

channels but it’s always okay to use a mutex if that’s all you need so now we need to implement this replicated call method whose job is to try sending to lots of different servers right but but first to try the the original server so so what does it mean if you know the try fails well there’s like no clear way for it to fail above it just always returns a reply and so the only way it can fail is if it’s taking too long so we’ll assume that if it takes too long that means it failed so in order to deal with timeouts we

have to run that code in the background in a different goroutine so we can do something like this where we set a timeout we create a timer and then we use the goroutine to send in the background and then at the end we wait and either we get the timeout or we get the actual reply if we get the actual reply we return it if we get the timeout we have to do something we’ll have to figure out what to do it’s worth pointing out that you have to call t.Stop because otherwise the timer sits in a timer queue that you

know it’s going to go off in one second and so you know if this call took a millisecond and you have this timer that’s going to sit there for the next second and then you do this in a loop and you get a thousand timers sitting in that that um that queue before they start actually you know um disappearing and so this is kind of a wart in the api but it’s been there forever and we’ve never fixed it um and and so you just have to remember to call stop uh and then you know now we have to figure out what do we do in the case of

the timeout and so in the case of the timeout we’re going to need to try a different server so we’ll write a loop and we’ll start at id 0 and if a reply comes in that’s great and otherwise we’ll reset the timeout and go around the loop again and try sending to a different server and notice there’s only one done channel in this program and so on the third iteration of the loop we might be waiting and then finally the first server gives us a reply that’s totally fine we’ll

take that reply that’s great um and so then we’ll stop and return it and but if we get all the way through the loop it means that we’ve sent the request to every single server in which case there’s no more timeouts we just have to wait for one of them to come back and so that’s the the plain receive and the return at the end and then it’s important to notice that the done channel is buffered now so that if you know you’ve sent the result to three different servers you’re going to take the first reply and

return but the others are going to want to send responses too and we don’t want those go routines to just sit around forever trying to send to a channel that we’re not reading from so we make the buffer big enough that they can send into the buffer and then go away and the channel just gets garbage collected that says like why can’t the timer just be garbage collected when nobody’s referencing it instead of having to to wait when it goes off when you said that you have multiple waiting if it goes off in one

millisecond yeah the problem is the timer is referenced by the runtime it’s in the list of active timers and so calling stop takes it out of the list of active timers and so that’s arguably kind of a wart in that in the specific case of a timer that’s only ever going to get used in this channel way we could have special cased that because inside the timer is this t.C channel right

so we could have had a different kind of channel implementation that inside had a bit that said hey i’m a timer channel right and then the select on it would know to just wait but if you just let go of it it would just disappear we’ve kind of thought about doing that for a while but we never did and so this is the state of the world but the garbage collector can’t distinguish between the reference inside the runtime and the reference in the rest

of the program it’s all just references and so until we like special case that channel in some way like we we can’t actually get rid of that thank you sure so um so then the only thing we have left is to have this preference where we try to use the same um id that we did the previous time and so to do that preference um we you know had the server id coming back in the reply anyway in the result channel and so you know we do the same sort of loop but we loop over an offset from the actual id we’re going to use which is

the preferred one and then when we get an answer we set the preferred one to where we got the answer from and then we reply and you’ll notice that i used a goto statement that’s okay if you need a goto it’s fine there’s no zealotry here all right so the fourth one and then we’ll do some questions is a protocol multiplexer and this is kind of the core logic of any rpc system and this comes up a lot i feel like i wrote a lot of these in grad school

and sort of years after that and so the basic api of a protocol multiplexer is that it sits in front of some service which we’re going to pass to the init method and then having been initialized with a service you can call call and give it a request message and then it’ll give you back the reply message at some point and the things it needs from the service to do multiplexing is that given a message it has to be able to pull out the tag that uniquely identifies the message

and will identify the reply because it will come back in with a matching tag and then it needs to be able to send a message out and to receive a message but the send and receive are for arbitrary messages that are not matched it’s the multiplexer’s job to actually match them so to start with we’ll have a goroutine that’s in charge of calling send and another goroutine that’s in charge of calling receive both in just a simple loop and so to initialize the service we’ll

set up the structure and then we’ll kick off the send loop and the receive loop and then we also have a map of pending requests and the map it maps from the tag that we saw the id number in the messages to a channel where the reply is supposed to go the send loop is fairly simple you just range over the things that need to be sent and you send them and this just has the effect of serializing the calls to send because we’re not going to force the service implementation to you know deal with us sending you know from multiple

routines at once we’re serializing it so that it can just be thinking of you know sending one one packet at a time and then the receive loop uh is a little bit more complicated it pulls a receive it pulls a reply off the the service and again they’re serialized so we’re only reading one at a time and then it pulls the tag out of the reply and then it says ah i need to find the channel to send this to uh so it pulls the channel out of the pending map it takes it out of the pending map so that you know if we

accidentally get another one we won’t try to send it and then it sends the reply and then to do a call you just have to set yourself up in the map and then hand it to send and wait for the reply so we start off we get the tag out we make our own done channel we insert the tag into the map after first checking for bugs and then we send the argument message to send and then we wait for the reply to come in on done it’s very very simple i mean i used to write these sort of things in c and it was much much worse

so that was all the patterns that i wanted to show and um you know i hope that those end up being useful for you in whatever future program you’re writing and and i hope that they’re you know just sort of good ideas even in non-go programs but that you know thinking about them and go can help you when you go to do other things as well so i’m gonna put them all back up and then um i have some questions that fran sent that were you know from all of you and um we’ll probably have some time for uh you know questions from from the chat

as well i have no idea in zoom where the chat window is so when we get to that people can just speak up i don’t use zoom on a daily basis unfortunately and normally i know how to use zoom regularly but with the presentation zoom is in this minimized thing that doesn’t have half the things i’m used to anyway someone asked how long go took and so far it’s been about 13 and a half years we started discussions in late september 2007 i joined full-time in august 2008 when i

finished at mit we did the initial open source launch november 2009 we released go 1 the sort of first stable version in october 2011 or sorry the plan was october 2011 go 1 itself was march 2012 and then we’ve just been on a regular schedule since then the next major change of course is going to be adding generics and that’s probably going to be go 1.18 which is going to be next february someone asked how big a team does it take to build a language like go

and you know for those first two years there were just five of us and and that was enough to get us to uh you know something that we released that actually could run in production but it was fairly primitive um you know it was it was a good prototype it was a solid working prototype but but it wasn’t like what it is today and over time we’ve expanded a fair amount now we’re up to something like 50 people employed directly or employed by google to work directly on go and then there’s tons of open source

contributors i mean there’s literal cast of thousands that have helped us over the last 13 years and there’s absolutely no way we could have done it even with 50 people without all the different contributions from the outside someone asked about design priorities um and and motivations and you know we we built it for us right the priority was to build something that was gonna help google and it just turned out that google was like a couple years ahead we were just in a really lucky spot where google was a

couple years ahead of the rest of the industry on having to write distributed systems right now everyone using cloud software is writing programs that talk to other programs and sending messages and there’s hardly any single machine programs anymore and so we sort of lucked into at some level building the language that the rest of the world needed a couple years later and then the other thing that was really a priority was making it work for large numbers of programmers and because

you know google had a very large number of programmers working in one code base and and now we have open source where you know even if you’re a small team you’re depending on code that’s written by a ton of other people usually and so a lot of the the issues that come up with just having many programmers still come up in that context so those were really the things we were trying to solve and you know for all of these things we we took a long time before we were willing to actually commit to putting something in the

language like everyone basically had to agree in the the core original group and and so that meant that it took us a while to sort of get the pieces exactly the way we wanted them but once we got them there they’ve actually been very stable and solid and really nice and they work together well and and the same thing is kind of happening with generics now where we actually feel i feel personally really good about generics i feel like it feels like the rest of go and that just wasn’t the case for the proposals

that we had even a couple years ago much less the early ones someone said they really like defer which is unique to the language and i do too thank you but i wanted to point out that we did absolutely create defer for go but swift has adopted it and i think there’s a proposal for c plus plus to adopt it as well so hopefully it kind of moves out a little bit there was a question about go and using capitalization for exporting which i know is something that

uh you know sort of is jarring when you first see it and and the story behind that is that well we needed something and we knew that we would need something but like at the beginning we just said look everything’s exported everything’s publicly visible we’ll deal with it later and after about a year it was like clear that we needed some way to you know let programmers hide things from other programmers and you know c plus plus has this public colon and private colon and in a large struct it’s actually

really annoying that like you’re looking you’re in the you’re looking at definitions and you have to scroll backwards and try to find where the like most recent public colon or private colon was and if it’s really big it can be hard to find one and so it’s like hard to tell whether a particular definition is public or private and then in java of course it’s at the beginning of every single field and that seemed kind of excessive too it’s just too much typing and so we looked around some more and

and someone pointed out to us that well python has this convention where you put an underscore in front to make something hidden and that seemed interesting but you probably don’t want the default to be not hidden you want the default to be hidden um and then we thought about well we could put like a plus in front of names um and then someone suggested well like what about uppercase could be exported and it seemed like a dumb terrible idea it really did um but as you think about it like i really didn’t like this idea um and i

have like very clear memory of sitting of like the room and what i was staring at as we discussed this uh but i had no logical argument against it and it turned out it was fantastic it was like it seemed bad it just like aesthetically but it is one of my favorite things now about go that when you look at a use of something you can see immediately you get that bit of is this something that other people can access or not at every use because if you know you see code calling a function to do you know whatever it is that it does you

think oh wow can other people do that and your brain sort of takes care of that but now i go to c plus plus and i see calls like that and i get really worried i’m like wait is that something other classes can get at and having that bit actually turns out to be really useful for reading code a couple people asked about generics if you don’t know we have an active proposal for generics we’re actively working on implementing it we hope that the release later in the year

towards the end of the year will actually have a full version of generics that you can actually use that’ll be like a preview release the real release that we hope it will be in is go 1.18 which is february of next year so maybe next class we’ll actually get to use generics we’ll see but i’m certainly looking forward to having a generic min and max the reason we don’t have those is that you’d have to pick which type they were for or have a whole suite of them

and it just seemed silly it seemed like we should wait for generics someone asked is there any area of programming where go may not be the best language but it’s still used and the answer is absolutely that happens all the time with every language i think go is actually a really good all around language but you might use it for something that it’s not perfect for just because the rest of your program is written in go and you want to interoperate with the rest of the program so there’s this website called

the online encyclopedia of integer sequences it’s a search engine you type in like two three five seven eleven and it tells you those are the primes and it turns out that the back end for that is all written in go and if you type in a sequence it doesn’t know it actually does some pretty sophisticated math on the numbers all with big numbers and things like that and all of that is written in go too because it was too annoying to shell out to maple and mathematica and sort of do that cross-language thing

even though you’d much rather implement it in those languages so you run into those sorts of compromises all the time and that’s fine someone asked about go is supposed to be simple so that’s why there’s no generics and no sets but isn’t it also for software developers and don’t software developers need all this stuff and it’s silly to reconstruct it and i think it’s true that there’s some tension but simplicity in the sense of leaving

things out was not ever the goal so like for sets you know it just seemed like maps are so close to sets you just have a set a map where the value is empty or a boolean that’s a set and for generics like you have to remember that when we started go in 2007 java was like just finishing a true fiasco of a rollout of generics and so like we were really scared of that we knew that if we just tried to do it um you know we would get it wrong and we knew that we could write a lot of useful programs without generics

and so that was what we did and um and we came back to it when you know we felt like okay we’ve you know spent enough time writing other programs we kind of know a lot more about what we need from from generics for go and and we can take the time to talk to real experts and i think that you know it would have been nice to have them five or ten years ago but we wouldn’t have had the really nice ones that we’re going to have now so i think it was probably the right decision um so there was a question about go

routines and the relation to the plan nine thread library which which was all cooperatively scheduled and whether go routines were ever properly scheduled and like if that caused problems and it is absolutely the case that like go and and the go routine runtime were sort of inspired by previous experience on plan nine there was actually a different language called alef on an early version of plan nine that was compiled it had channels it had select it had things we called tasks which were a little bit like go routines but it

didn’t have a garbage collector and that made things really annoying in a lot of cases and also the way that tasks work they were tied to a specific thread so you might have three tasks in one thread and two tasks in another thread and in the three tasks in the first thread only one ever ran at a time and they could only reschedule during a channel operation and so you would write code where those three tasks were all operating on the same data structure and you just knew because it was in your head when you wrote it

that you know it was okay for these two different tasks to be scribbling over the same data structure because they could never be running at the same time and meanwhile you know in the other thread you’ve got the same situation going on with different data and different tasks and then you come back to the same program like six months later and you totally forget which tasks could write to different pieces of data and i’m sure that we had tons of races i mean it was just it was a nice model for small programs

and it was a terrible model for for programming over a long period of time or having a big program that other people had to work on so so that was never the model for go the model for go was always it’s good to have these lightweight go routines but they’re gonna all be running independently and if they’re going to share anything they need to use locks and they need to use channels to communicate and coordinate explicitly and and that that has definitely scaled a lot better than any of the plan nine stuff ever

did um you know sometimes people hear that go routines are cooperatively scheduled and they they think you know something more like that it’s it’s true that early on the go routines were not as preemptively scheduled as you would like so in the very very early days the only preemption points were when you called into the runtime shortly after that the preemption points were any time you entered a function but if you were in a tight loop for a very long time that would never preempt and that would cause like garbage

collector delays because the garbage collector would need to stop all the go routines and there’d be some go routine stuck in a tight loop and it would take forever to finish the loop um and so actually in the last couple releases we finally started we figured out how to get um unix signals to deliver to threads in just the right way and we can have the right bookkeeping to actually be able to use that as a preemption mechanism and and so now things are i think i think the preemption delays for garbage

collection are actually bounded finally but but from the start the model has been that you know they’re running preemptively and and they don’t get control over when they get preempted uh as a sort of follow-on question someone else asked uh you know where they can look to in the source tree to learn more about go routines and and the go routine scheduler and and the answer is that you know this is basically a little operating system like it’s a little operating system that sits on top of the other operating system instead of on

top of cpus um and so the first thing to do is like take 6.828 which is like there i mean i i worked on 6.828 and and xv6 like literally like the year or two before i went and did the go runtime and so like there’s a huge amount of 6.828 in the go runtime um and in the actual go runtime directory there’s a file called proc.go

which is you know proc stands for process because like that’s what it is in the operating systems um and i would start there like that’s the file to start with and then sort of pull on strings someone asked about python sort of negative indexing where you can write x of minus one and and that comes up a lot especially from python programmers and and it seems like a really great idea you write these like really nice elegant programs where like you want to get the last element you just say x of minus one but the real problem is that like you

have x of i and you have a loop that’s like counting down from from you know n to zero and you have an off by one somewhere and like now x of minus one instead of being you know x of i when i is minus one instead of being an error where you see like immediately say hey there’s a bug i need to find that it just like silently grabs the element off the other end of the array and and that’s where you know the sort of python um you know simplicity you know makes things worse and so that was why we left it out
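The off-by-one scenario described here can be sketched as follows. The function `sumBackward` is a made-up example with a deliberately wrong loop bound, and the `recover` is only there so the sketch can print the error instead of crashing.

```go
package main

import "fmt"

// sumBackward is a hypothetical buggy function: the loop condition should be
// i >= 0, so the final iteration evaluates x[-1].
func sumBackward(x []int) (total int, err error) {
	defer func() {
		// Turn the runtime panic into an error so main can report it.
		if r := recover(); r != nil {
			err = fmt.Errorf("caught: %v", r)
		}
	}()
	for i := len(x) - 1; i >= -1; i-- { // off by one: runs with i == -1
		total += x[i]
	}
	return total, nil
}

func main() {
	_, err := sumBackward([]int{1, 2, 3})
	fmt.Println(err) // reports an index-out-of-range error for index -1
}
```

In python the equivalent loop would not fail; it would quietly add the last element a second time, which is the kind of hidden bug being described.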

because it was it was gonna hide bugs too much we thought um you know you could imagine something where you say like x of dollar minus one or len minus one not len of x but just len but you know it seemed like too much of a special case and it really it doesn’t come up enough um someone asked about uh you know what aspect of go was hardest to implement and honestly like a lot of this is not very hard um we’ve done most of this before we’d written operating systems and threading libraries and channel implementations

and so like doing all that again was fairly straightforward the hardest thing was probably the garbage collector go is unique among garbage collected languages in that it gives programmers a lot more control over memory layout so if you want to have a struct with two different other structs inside it that’s just one big chunk of memory it’s not a struct with pointers to two other chunks of memory and because of that you can take the address of like the second field in the struct and pass that around

and that means the garbage collector has to be able to deal with a pointer that could point into the middle of an allocated object and that’s just something that java and lisp and other things just don’t do um and so that makes the garbage collector a lot more complicated in how it maintains its data structures and we also knew from the start that you really want low latency because if you’re handling network requests uh you can’t you know just pause for 200 milliseconds and block all of those
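A small sketch of that layout point, assuming made-up types `Point` and `Segment` and a made-up helper `moveRight`: the outer struct holds the inner structs by value, and a pointer to the second field points into the middle of the single allocation.

```go
package main

import (
	"fmt"
	"unsafe"
)

// Point and Segment are hypothetical types used only for illustration.
type Point struct{ X, Y int64 }

// Segment holds two Points by value, so it is one contiguous block,
// not a header with pointers to two separate objects.
type Segment struct {
	A Point
	B Point
}

// moveRight updates a Point through a pointer; it does not know or care
// that the Point may live inside a larger allocation.
func moveRight(p *Point, dx int64) { p.X += dx }

func main() {
	s := &Segment{A: Point{0, 0}, B: Point{3, 4}}
	// Total size and the offset of the second field within the block.
	fmt.Println(unsafe.Sizeof(*s), unsafe.Offsetof(s.B)) // prints: 32 16
	moveRight(&s.B, 10)                                  // pointer into the middle of s
	fmt.Println(s.B.X)                                   // prints: 13
}
```

The `unsafe` calls are used here only to print the layout; ordinary code would just take `&s.B` and pass it around.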

in progress requests to do a garbage collection it really needs to be in you know low latency and not stop things and we thought that multicore would be a good a good opportunity there because we could have the garbage collector sort of doing one core and the go program using the other cores and and that might work really well and that actually did turn out to work really well but it required hiring a real expert in garbage collection to uh like figure out how to do it um and make it work but but now it’s it’s really great um i

i have a quick question yeah you said um like if a struct is declared inside another struct it actually is all a big chunk of memory yeah why do why did you implement it like that what’s the reasoning behind that um i well so there’s a couple reasons one is for a garbage collector right it’s a service and the load on the garbage collector is proportional to the number of objects you allocate and so if you have you know a struct with five things in it you can make that one allocation that’s like a fifth of

the the load on the garbage collector and that turns out to be really important but the other thing that’s really important is cache locality right like if you have the processor is pulling in chunks of memory in like you know 64 byte chunks or whatever it is and it’s much better at reading memory that’s all together than reading memory that’s scattered and so um you know we have a git server at google called gerrit that is written in java and it was just starting at the time that go was you know just coming out and and

we we just missed like gerrit being written in go i think by like a year um but we talked to the the guy who had written gerrit and he said that like one of the biggest problems in in gerrit was like you have all these sha-1 hashes and just having the idea of 20 bytes is like impossible to have in java you can’t just have 20 bytes in a struct you have to have a pointer to an object and the object like you know you can’t even have 20 bytes in the object right you have to declare like five different ints or

something like that to get 20 bytes and there’s just like no good way to do it and and it’s just the overhead of just a simple thing like that really adds up um and so you know we thought giving programmers control over memory was really important um so another question was was about automatic parallelization like for loops and things like that we don’t do anything like that in the standard go tool chain there are go front ends for gcc and llvm and so to the extent that those do those

kind of loop optimizations in c i think you know we get the same from the go front ends for those but it’s it’s not the kind of parallelization that we typically need at google it’s it’s more um you know lots of servers running different things and and so you know that sort of you know like the sort of big vector math kind of stuff doesn’t come up as much so it just hasn’t been that important to us um and then the last question i have written now is that someone uh asked about like how do you decide when

to acquire and release locks and why don’t you have reentrant locks and for that i want to go back a slide let me see yeah here so like you know during the lecture i said things like the lock like mu protects the map or it protects the data but what we really mean at that point is that we’re saying that the lock protects some collection of invariants that apply to the data or that are true of the data and the reason that we have the lock is to to protect the operations that depend on the invariants and that sometimes temporarily

invalidate the invariants from each other and so when you call lock what you’re saying is i need to make use of the invariants that this lock protects and when you call unlock what you’re saying is i don’t need them anymore and if i temporarily invalidated them i’ve put them back so that the next person who calls lock will see you know correct invariants so in the mux you know we want the invariant that each registered pending channel gets at most one reply and so to do that when we take done out of the map

we also delete it from the map before we unlock it and if there was some separate kind of cancel operation that was directly manipulating the map as well it could call lock it could take the thing out call unlock and then you know if it actually found one it would know no one is going to send to that anymore because i took it out whereas if you know we had written this code to have you know an extra unlock and re-lock between the done equals pending of tag and the delete then you wouldn’t have that you know

protection of the invariants anymore because you would have unlocked and relocked while the invariants were broken and so it’s really important to you know correctness to think about locks as protecting invariants and and so if you have re-entrant locks uh all that goes out the window without the re-entrant lock when you call lock on the next line you know okay the lock just got acquired all the invariants are true if you have a re-entrant lock all you know is well all the invariants were true

for whoever locked this the first time who like might be way up here on my call stack and and you really know nothing um and so that makes it a lot harder to reason about like what can you assume and and so i think reentrant locks are like a really unfortunate part of java’s legacy another big problem with re-entrant locks is that if you have code where you know you call something and it is depending on the re-entrant lock for you know something where you’ve acquired the lock up above and and then at some point you say you

know what actually i want to like have a timeout on this or i want to do it uh you know in some other go routine while i wait for something else when you move that code to a different go routine re-entrant always means locked on the same stack that’s like the only plausible thing it could possibly mean and so if you move the code that was doing the re-entrant lock onto a different stack then it’s going to deadlock because that lock is now actually going to be a real lock acquire and it’s going to be

waiting for you to let go of the lock and you’re not going to let go of it because you know you think that code needs to finish running so it’s actually like completely fundamentally incompatible with restructurings where you take code and run it in different threads or different go routines and so so anyway like my advice there is to just you know think about locks as protecting invariants and then you know just avoid depending on reentrant locks it it really just doesn’t scale well to to real programs
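The idea of a lock protecting an invariant, with the lookup and the delete kept in one critical section, might look something like the sketch below. This is a simplified stand-in, not the mux from the slides: the `Mux` type, its string replies, and the `Register`, `take`, `Deliver` and `Cancel` names are all assumptions made for the example.

```go
package main

import (
	"fmt"
	"sync"
)

// Mux is a simplified, hypothetical stand-in for the lecture's mux.
// mu protects the invariant that every channel still in pending
// has not yet been sent a reply.
type Mux struct {
	mu      sync.Mutex
	pending map[int]chan string
}

// Register records a buffered reply channel for tag.
func (m *Mux) Register(tag int) chan string {
	done := make(chan string, 1)
	m.mu.Lock()
	m.pending[tag] = done
	m.mu.Unlock()
	return done
}

// take looks up and deletes tag in a single critical section, so at most
// one caller ever obtains the channel registered for a given tag.
func (m *Mux) take(tag int) chan string {
	m.mu.Lock()
	defer m.mu.Unlock()
	done := m.pending[tag]
	delete(m.pending, tag)
	return done
}

// Deliver sends reply to the waiter for tag, if one is still registered.
func (m *Mux) Deliver(tag int, reply string) bool {
	done := m.take(tag)
	if done == nil {
		return false
	}
	done <- reply
	return true
}

// Cancel removes tag; true means no reply will ever be sent for it.
func (m *Mux) Cancel(tag int) bool { return m.take(tag) != nil }

func main() {
	m := &Mux{pending: make(map[int]chan string)}
	done := m.Register(1)
	fmt.Println(m.Deliver(1, "hello"), <-done)     // prints: true hello
	fmt.Println(m.Deliver(1, "again"))             // prints: false
	m.Register(2)
	fmt.Println(m.Cancel(2), m.Deliver(2, "late")) // prints: true false
}
```

If `take` unlocked between the lookup and the delete, two callers could both obtain the same channel, and the at-most-one-reply invariant would no longer hold.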

so i’ll put this list back up actually you know we have that up long enough i can try to figure out how to stop presenting um and then i can take a few more questions um i had i had a question yeah um and i mean i i think coming from python like it’s very useful right it’s very common to use like like standard functional operations right like map yeah um or filter stuff like that like um like list comprehension and when you know i switched over to go and started programming it’s used i i looked it up and people

say like you shouldn’t do this do this with loop right i was wondering why um well i mean one is that like you can’t do it the other way so you might as well do it the way you can do it um but uh you know a bigger a bigger issue is that well there’s that was one answer the other answer is that uh you know if you do it that way you actually end up creating a lot of garbage and if you care about like not putting too much load on the garbage collector that kind of is another way to avoid that you know so if you’ve got

like a map and then a filter and then another map like you can make that one loop over the data instead of three loops over the data each of which generate a new piece of garbage but you know now that we have generics coming um you’ll actually be able to write those functions like you couldn’t actually write what the type signature of those functions were before and so like you literally couldn’t write them and python gets away with this because there’s no no you know static types but now we’re